Overview

The Quiz Platform provides a comprehensive set of assessment tools designed to improve student learning and simplify operations for teachers and administrators. It takes a modular approach to assessment creation and student assignment, and gives students a single, consistent test-taking experience.

Challenges Leading to the Development of the Quiz Platform

The creation of the Quiz Platform addressed several pressing challenges that emerged from a fragmented and inefficient assessment ecosystem:

- Acquisition of Companies with Different Assessment Platforms: The consolidation of multiple companies, each with its own platform, resulted in disparate systems, inconsistent workflows, and significant integration challenges.

- Non-Standard and Buggy Student Experience: The lack of a unified user interface led to inconsistent experiences, frequent errors, and confusion, ultimately impacting student engagement and satisfaction.

- Large Maintenance Team and High Operational Overhead: Maintaining multiple platforms required a sizable support team, leading to increased costs, duplicated efforts, and operational inefficiencies.

- Non-Scalable Architecture: Existing systems struggled to handle growing user volumes and new feature integrations, limiting their ability to scale with the organisation’s expanding needs.

- Challenges in Adding New Question Types: The rigid architecture of legacy platforms made it difficult to introduce innovative question formats, limiting assessment variety and effectiveness.

- Difficulty in Onboarding Internal and External Users: Complex onboarding processes, a lack of standardised workflows, and poor documentation created barriers for both internal teams and external partners.

- Lack of Version Management and Content Tracking: The absence of proper version control and tracking mechanisms caused confusion over which quiz versions were live, increasing the risk of content errors and delivery issues.

The Problem

Beyond these challenges, the following critical problems underscored the need for a unified platform:

- Lack of a Common Platform: The absence of a unified solution for authoring, delivering, and tracking assessments resulted in inefficiencies. Question authors and assessment creators had to undergo retraining each time they were onboarded to a new platform, increasing time and resource overhead.

- Reusability of Questions: There was no shared question repository to draw on when creating quizzes for different organisations. Because marking schemes were tied directly to individual questions, reusing a question in a quiz with a different marking scheme meant duplicating it. As a result, unique questions made up only 10 to 20% of the question bank's estimated total.

- Test Configurations: The existing platforms offered little flexibility in how assessments could be configured and delivered, which stifled innovation in quiz formats. As a result, most companies avoided experimenting with their assessments, and quizzes remained standardised and fixed-format across organisations.

- Poor Test Interface Replication: Inconsistent test experiences across courses confused students. In addition, some assessments needed interfaces that closely resembled government-led examinations, so that students were familiar with the format and the assessments met compliance requirements.

- Question Bank Silos: Content could not be shared across departments or platforms, which created inefficiencies. Each company described questions with its own preferred set of parameters, making integration complex and requiring significant migration effort.

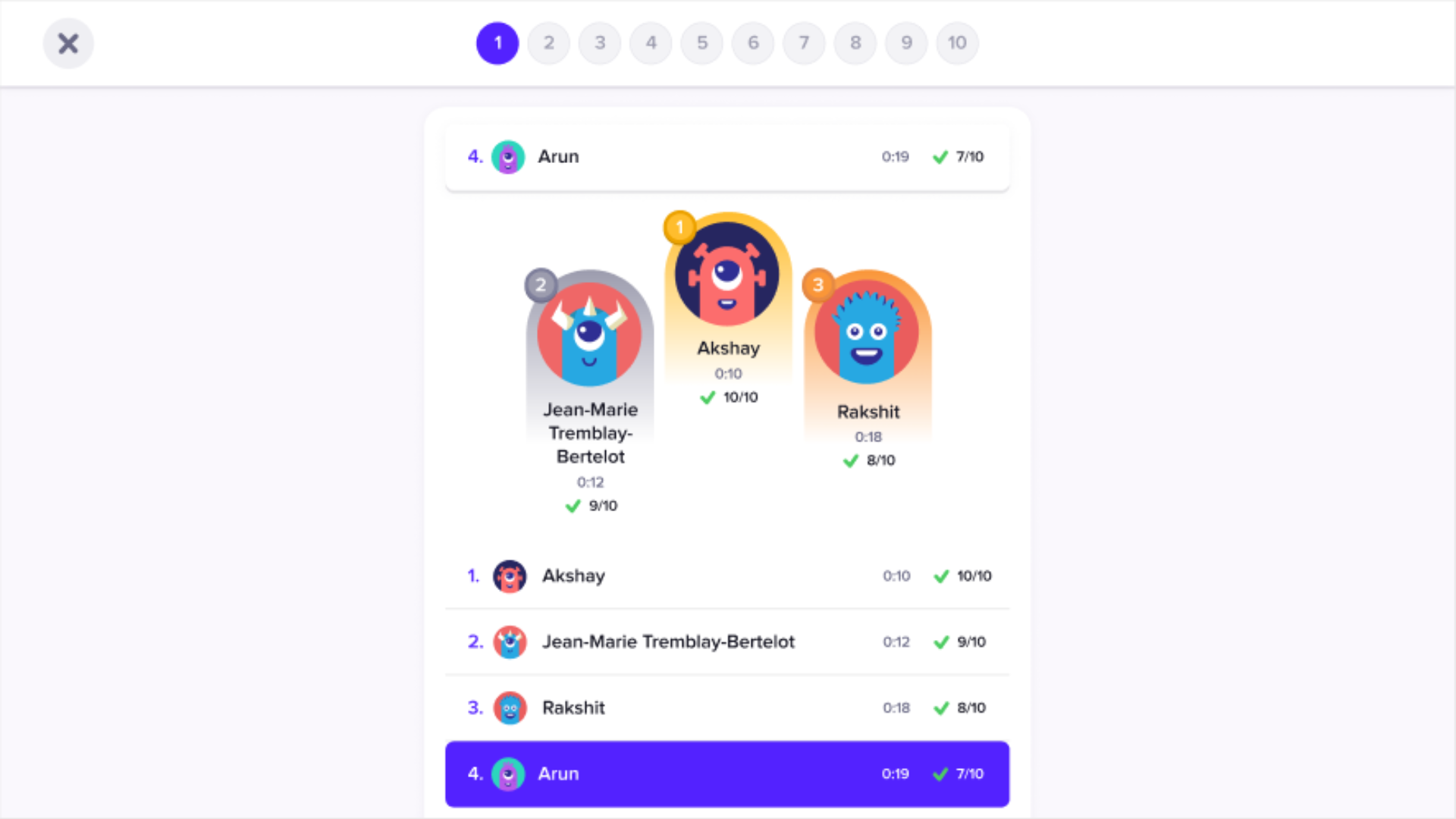

- Limited Engagement in Learning: The absence of interactive features such as live quizzes, leaderboards, and real-time feedback reduced student participation.

- Poor Student Experience: Students had to move between disjointed platforms for assignments, submissions, and results, which fragmented their experience.

- Complex Classroom Management: Teachers struggled to manage in-class assessments and live responses due to the inflexibility of the live quiz system, which lacked adaptability for new features and enhancements.

- Lack of Multi-Language Support: The absence of granular language options created barriers for regional and non-English-speaking users. Most platforms supported language selection only at the assessment level, rather than at the individual question level. As a result, multiple versions of the same question had to be created in different languages, leading to redundancy and inefficiencies.

Our Solution

The Quiz Platform was designed to overcome these challenges by providing a comprehensive suite of tools for efficient assessment creation, delivery, and analysis. Built as a multi-client, multi-tenant system, the platform works seamlessly across multiple organisations, enabling scalable and customisable assessment solutions.
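
Multi-tenancy means that many organisations share one deployment while their data stays separate. A common way to achieve this is to scope every read and write by a tenant identifier, as in the minimal sketch below. It is written in Python only for illustration, and `TenantScopedStore` with its `put` and `get` methods is a hypothetical name, not the platform's actual storage layer.

```python
class TenantScopedStore:
    """Hypothetical multi-tenant store: every record lives in its tenant's
    namespace, so organisations never see each other's data even when
    record IDs collide."""

    def __init__(self) -> None:
        # tenant_id -> {record key -> value}
        self._data: dict[str, dict[str, object]] = {}

    def put(self, tenant_id: str, key: str, value: object) -> None:
        # Create the tenant's namespace on first write.
        self._data.setdefault(tenant_id, {})[key] = value

    def get(self, tenant_id: str, key: str) -> object:
        # Lookups are confined to the caller's own namespace.
        try:
            return self._data[tenant_id][key]
        except KeyError:
            raise KeyError(f"{key!r} not found for tenant {tenant_id!r}") from None


store = TenantScopedStore()
store.put("org-a", "quiz-1", "Algebra basics")
store.put("org-b", "quiz-1", "Physics mock test")  # same ID, different tenant
print(store.get("org-a", "quiz-1"))  # Algebra basics
```

In a real deployment the tenant ID would typically be derived from the authenticated client rather than passed in freely, so that one client cannot request another organisation's namespace.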

The platform comprised three major components that together provided a unified experience across test creation, test taking, and test correction (for subjective questions).

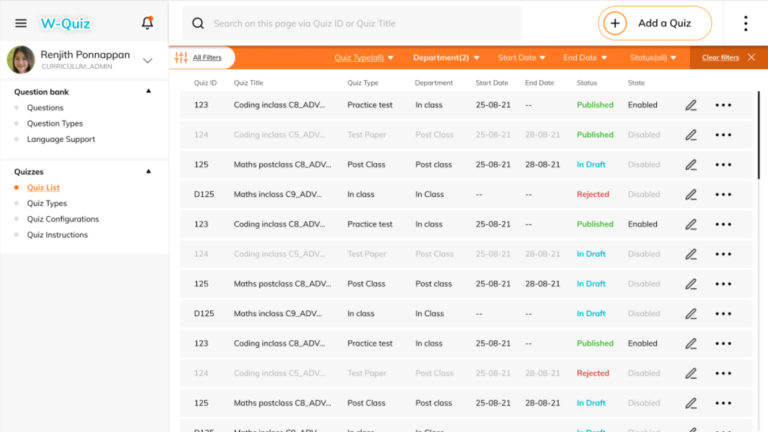

Test Creation Interface

The Test Creation Interface was built to give the authoring team comprehensive tools for managing every aspect of assessment development. It supported the creation of questions, assessments, and configurations, as well as the drafting of instructions and terms and conditions. Beyond content creation, the interface also served as a user management tool, enabling teams to assign tests, manage user access (such as adding or removing users), and adjust attempt limits.

Additionally, the interface functioned as a centralised question bank, allowing the ingestion of questions from existing repositories for easy reuse and seamless integration into new assessments. This streamlined approach not only enhanced efficiency but also promoted consistency across assessments.

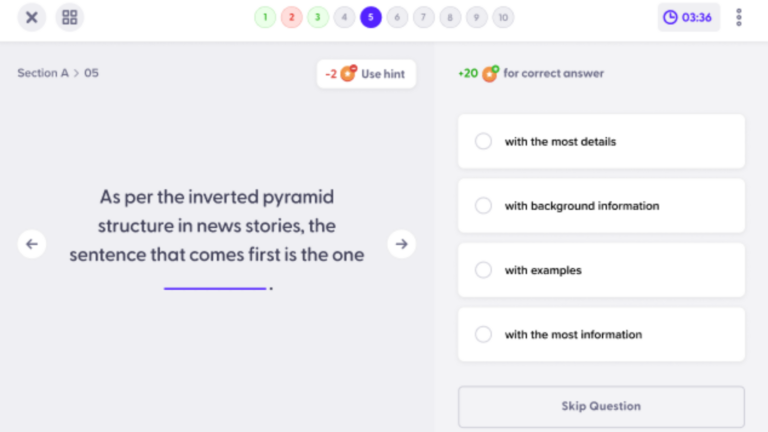
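
The duplication problem described earlier arose because marking schemes were attached to questions. One way to model the alternative is sketched below, assuming a bank keyed by question ID: each question is stored once with its text per language, and each quiz references questions by ID while carrying its own marking scheme and attempt limit. All names (`Question`, `QuizItem`, `Quiz`, `render`, `score`, `can_attempt`) are hypothetical, and falling back to English when a translation is missing is an assumption of this sketch.

```python
from dataclasses import dataclass


@dataclass
class Question:
    """A bank entry stored once, with its text held per language code."""
    question_id: str
    translations: dict[str, str]  # e.g. {"en": "...", "fr": "..."}


@dataclass
class QuizItem:
    """A reference to a bank question plus the marks that apply in this quiz only."""
    question_id: str
    marks_correct: float
    marks_wrong: float = 0.0


@dataclass
class Quiz:
    quiz_id: str
    items: list[QuizItem]
    max_attempts: int = 1


def render(quiz: Quiz, bank: dict[str, Question], lang: str, fallback: str = "en") -> list[str]:
    """Return each question's text in `lang`, using `fallback` when a translation is missing."""
    out = []
    for item in quiz.items:
        question = bank[item.question_id]
        text = question.translations.get(lang)
        if text is None:
            text = question.translations[fallback]
        out.append(text)
    return out


def score(quiz: Quiz, responses: dict[str, bool]) -> float:
    """Apply this quiz's marking scheme; unanswered questions score zero."""
    total = 0.0
    for item in quiz.items:
        correct = responses.get(item.question_id)
        if correct is True:
            total += item.marks_correct
        elif correct is False:
            total += item.marks_wrong
    return total


def can_attempt(quiz: Quiz, attempts_used: int) -> bool:
    """Enforce the attempt limit configured by the authoring team."""
    return attempts_used < quiz.max_attempts


# One question, reused by two quizzes with different marking schemes.
bank = {"q1": Question("q1", {"en": "What is 2 + 2?", "fr": "Combien font 2 + 2 ?"})}
quiz_a = Quiz("quiz-a", [QuizItem("q1", marks_correct=4, marks_wrong=-1)])
quiz_b = Quiz("quiz-b", [QuizItem("q1", marks_correct=1)], max_attempts=3)

print(render(quiz_a, bank, "fr"))    # ['Combien font 2 + 2 ?']
print(score(quiz_a, {"q1": False}))  # -1.0
print(score(quiz_b, {"q1": False}))  # 0.0
```

Because the marking scheme lives on the quiz item rather than on the question, changing marks for a new quiz never requires copying the question, and adding a language means adding one translation rather than a new question.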

Test Taker SDK

To deliver a seamless test-taking experience, we developed a Test Taker SDK that clients could easily integrate into their web or mobile applications. Clients only needed to invoke the SDK using the provided quiz ID, with the entire quiz experience managed by the SDK. This eliminated the need for clients to invest additional effort in ensuring a smooth and consistent student experience.

The SDK was optimised for both size and performance, so it added little to the client application’s footprint while remaining fast. It was available for web, Android, and iOS.

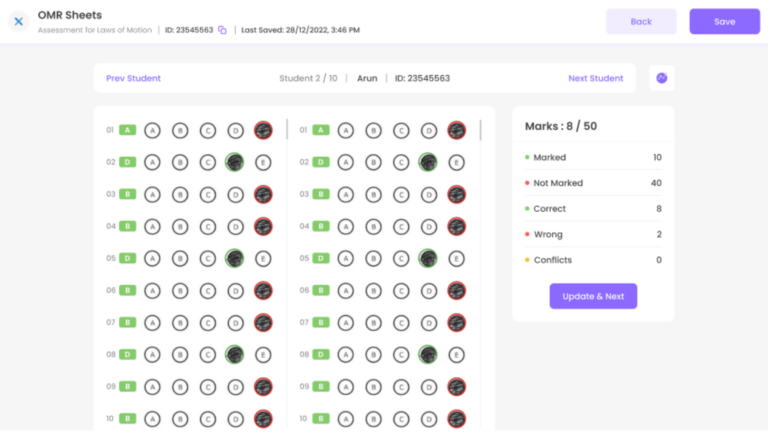
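
To make "the entire quiz experience managed by the SDK" concrete, here is a minimal sketch of the kind of state such an SDK might own once the host app hands it a quiz ID: loading the quiz, recording answers, reporting unanswered questions, and locking the attempt on submission. The real SDK targets web, Android, and iOS; Python is used here only to show the logic, and `QuizSession` and the injected `fetch_quiz` loader are hypothetical names.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class QuizSession:
    """Hypothetical core of a test-taking SDK: the host app passes a quiz ID,
    and the session owns loading, answer state, and submission."""
    quiz_id: str
    fetch_quiz: Callable[[str], list[str]]  # assumed loader: quiz ID -> question IDs
    answers: dict[str, str] = field(default_factory=dict)
    submitted: bool = False
    question_ids: list[str] = field(init=False, default_factory=list)

    def __post_init__(self) -> None:
        # Load the quiz once; the host app never handles question data itself.
        self.question_ids = self.fetch_quiz(self.quiz_id)

    def answer(self, question_id: str, response: str) -> None:
        if self.submitted:
            raise RuntimeError("this attempt has already been submitted")
        if question_id not in self.question_ids:
            raise KeyError(f"{question_id!r} is not part of {self.quiz_id!r}")
        self.answers[question_id] = response  # a later answer overwrites an earlier one

    def unanswered(self) -> list[str]:
        return [q for q in self.question_ids if q not in self.answers]

    def submit(self) -> dict[str, str]:
        # Lock the attempt and hand back a copy of the final answers.
        self.submitted = True
        return dict(self.answers)


session = QuizSession("quiz-42", fetch_quiz=lambda quiz_id: ["q1", "q2"])
session.answer("q1", "B")
print(session.unanswered())  # ['q2']
print(session.submit())      # {'q1': 'B'}
```

Injecting the loader keeps the session independent of any particular network layer, which is one way a single SDK core could be shared across host platforms.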

Teacher Correction SDK

The Teacher Correction SDK was designed to streamline the subjective answer evaluation process, enabling seamless integration into the client’s web or mobile platforms. By invoking the SDK with the test ID, teachers could efficiently review, score, and provide feedback on student responses without needing additional tools or interfaces.

The SDK was built with performance and usability in mind, giving teachers a responsive and intuitive experience while keeping a lightweight footprint within the client application. It also supported comments, handwritten notes, and partial scoring for subjective assessments.
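
Partial scoring is easiest to see with a rubric. The sketch below assumes that each subjective question is marked against a list of criteria, each with a maximum mark; the teacher awards marks per criterion and may add comments, while handwritten notes are left out. `RubricCriterion`, `Evaluation`, and `total_score` are hypothetical names, and clamping out-of-range marks is a design choice of this sketch rather than documented platform behaviour.

```python
from dataclasses import dataclass, field


@dataclass
class RubricCriterion:
    """One criterion in a hypothetical marking rubric for a subjective answer."""
    name: str
    max_marks: float


@dataclass
class Evaluation:
    """A teacher's marks per criterion plus free-text comments on one response."""
    awarded: dict[str, float] = field(default_factory=dict)
    comments: list[str] = field(default_factory=list)


def total_score(rubric: list[RubricCriterion], evaluation: Evaluation) -> float:
    """Sum partial marks, clamping each criterion to [0, max_marks].

    Criteria the teacher has not marked yet contribute zero."""
    total = 0.0
    for criterion in rubric:
        marks = evaluation.awarded.get(criterion.name, 0.0)
        total += min(max(marks, 0.0), criterion.max_marks)
    return total


rubric = [RubricCriterion("method", 3), RubricCriterion("final answer", 2)]
evaluation = Evaluation(awarded={"method": 2.5, "final answer": 5},
                        comments=["Correct approach; arithmetic slip in step 3."])
print(total_score(rubric, evaluation))  # 4.5 ("final answer" is capped at 2)
```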

Deployment and Client Onboarding

Our platform development strategy was driven by a client-centric onboarding approach, ensuring that every feature built addressed real client needs while remaining scalable for future use.

- Client-Driven Feature Development: We prioritised platform features based on specific client requirements. Each onboarding cycle involved selecting a client, developing the necessary features in a scalable manner, and then onboarding them. This iterative approach ensured that the platform was thoroughly tested and refined before expanding to additional clients.

- Continuous Improvement Across Clients: Enhancements made during the onboarding of new clients were automatically rolled out to existing clients, ensuring that all users benefitted from the latest platform improvements.

- Seamless Data and Application Migration: The assessment team handled end-to-end data and application migration, simplifying the transition process for clients and minimising downtime.

- Scalable and Tested Platform: By adopting a client-by-client onboarding approach, the platform was incrementally stress-tested in real-world conditions, ensuring high reliability and scalability as the client base expanded.

This approach not only accelerated client onboarding but also fostered continuous platform improvement, driving higher client satisfaction and operational efficiency.

Impact of the New Assessment Platform

- 🚀 High Assessment Volume: Over 500,000 assessments successfully completed on the platform every month, demonstrating its scalability and reliability.

- 🧑‍💻 Seamless Live Assessments: The platform efficiently managed 7,000 concurrent live users, ensuring a smooth and stable experience during large-scale assessments.

- 📝 Accelerated Subjective Evaluation: The time required for subjective assessment corrections was slashed by 80%, significantly improving efficiency for educators.

- 🌐 Enhanced Question Reusability: Implementing multilingual support at the individual question level reduced duplicate questions by 80%, enabling faster localisation and broader reach.

- 💡 Frictionless Onboarding: Thanks to the platform’s intuitive design, most onboarded authoring teams needed little or no training, reducing ramp-up time.

- 🤝 Efficient Issue Resolution: Common issues and concerns were addressed swiftly through just a couple of Q&A sessions, highlighting the platform’s user-friendly design and robust support.

- 📊 Operational Efficiency Gains: The streamlined assessment creation, delivery, and correction processes contributed to a significant reduction in operational overhead for client organisations.

- 💯 Consistent Performance: The platform maintained high uptime and low latency, ensuring a reliable experience even during peak usage.
